MyDreamerV2

A reimplementation and extension of DreamerV2, with exploration via Plan2Explore and selected improvements from DreamerV3.

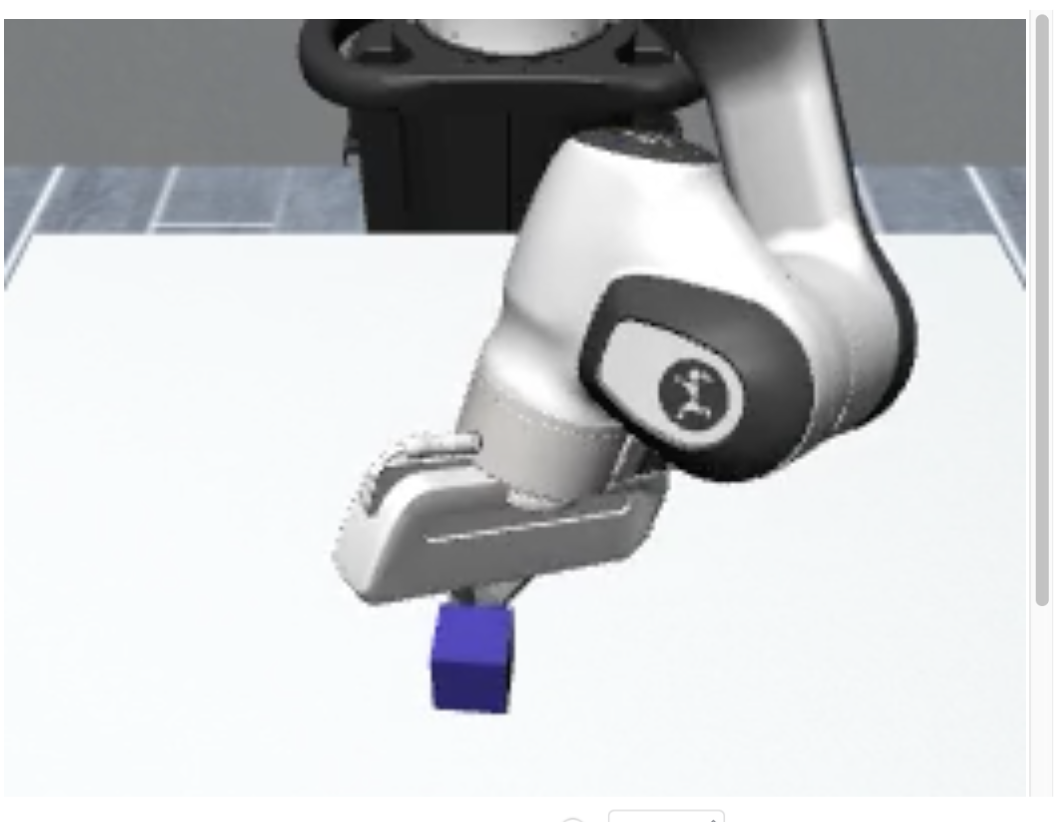

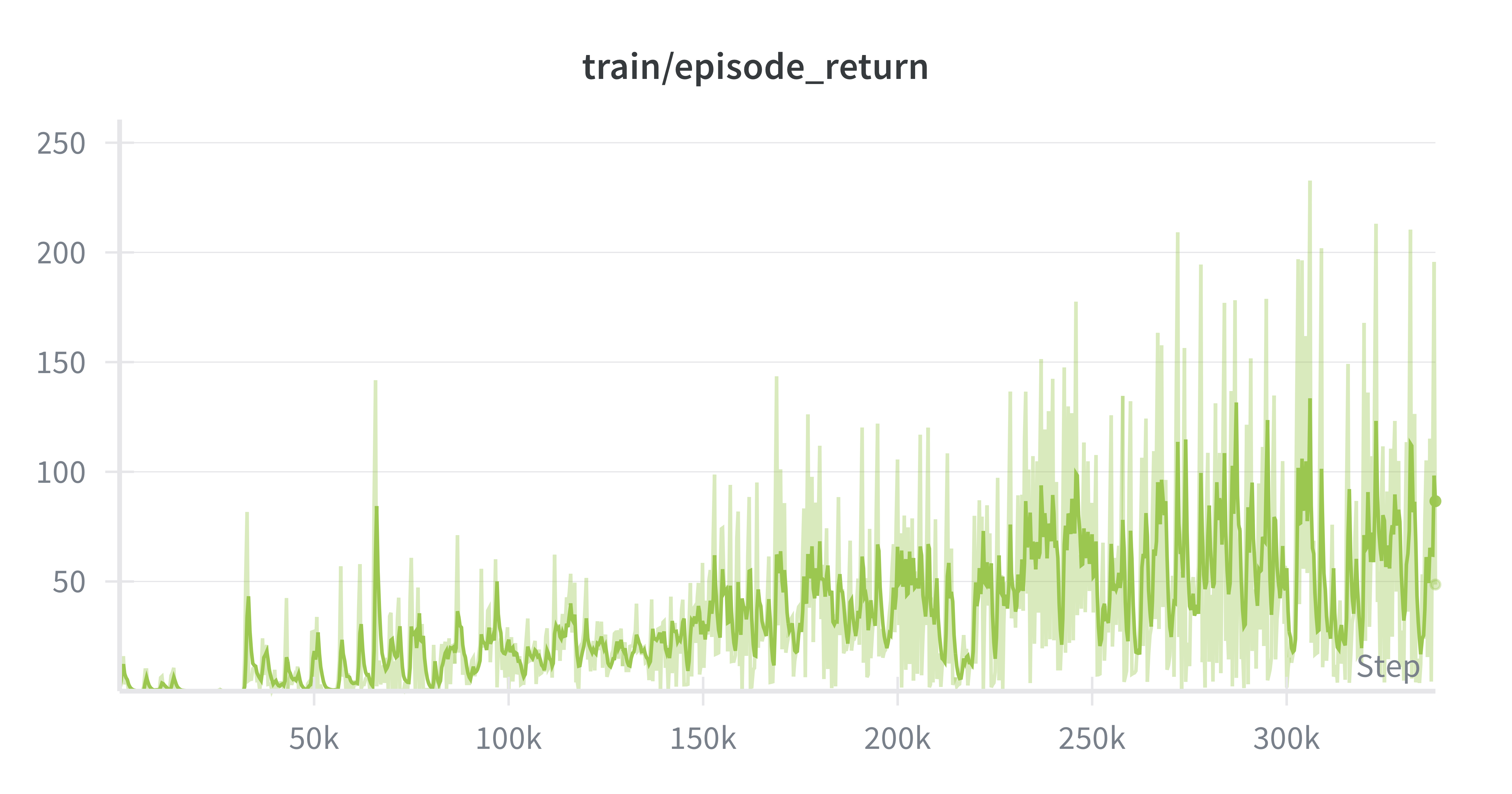

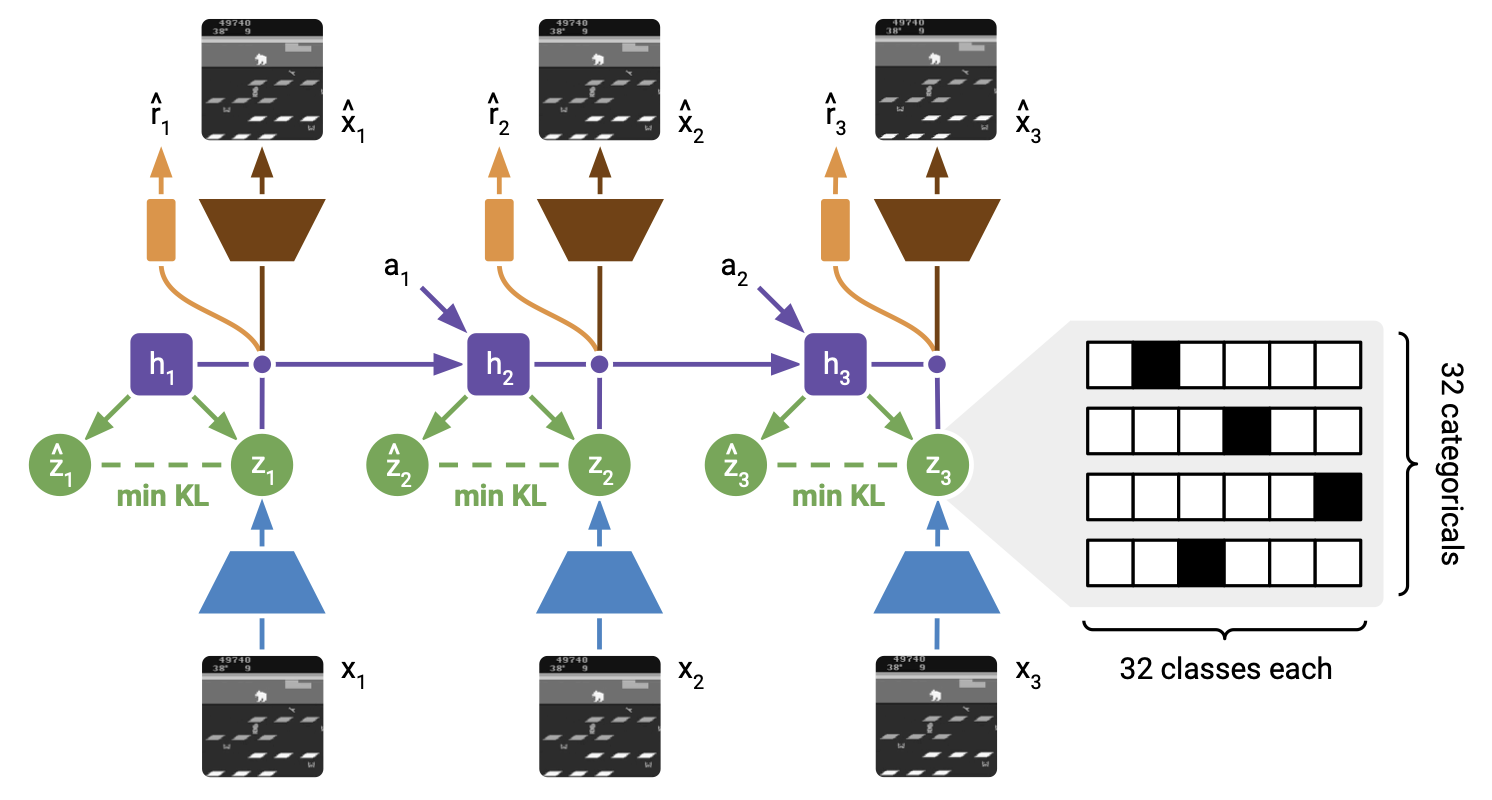

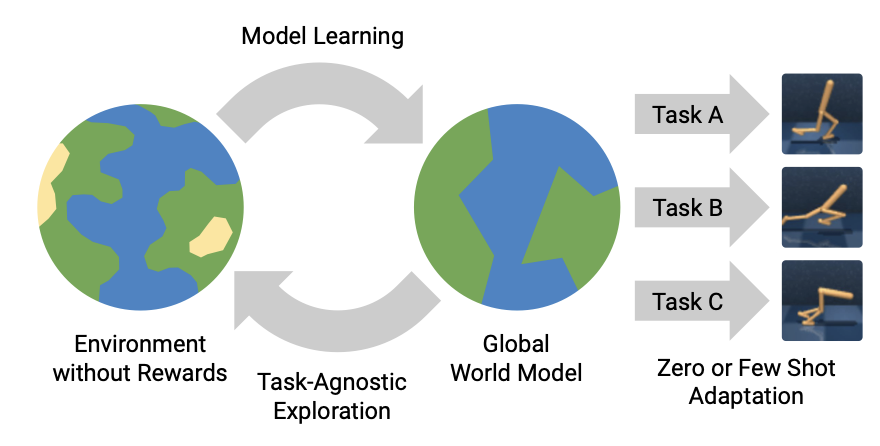

MyDreamerV2 is a PyTorch reimplementation of DreamerV2 that focuses on understanding and extending world-model–based reinforcement learning. The project includes a faithful reproduction of the original DreamerV2 pipeline, an explicit implementation of Plan2Explore for intrinsic motivation, and selected architectural and training improvements inspired by DreamerV3.

Beyond reproducing the baseline DreamerV2 results, this implementation emphasizes exploration through uncertainty. Plan2Explore is implemented on top of the learned latent dynamics, encouraging the agent to seek trajectories where the world model is uncertain.
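
As a rough illustration of "exploration through uncertainty", the sketch below computes a Plan2Explore-style intrinsic reward as the disagreement (variance) across an ensemble of one-step predictors that take a latent feature and an action as input. It is a minimal sketch under stated assumptions, not the code from this repository: the class name `DisagreementEnsemble`, its parameters (`feat_dim`, `action_dim`, `target_dim`, `num_models`, `hidden`), and the layer sizes are all hypothetical.

```python
import torch
import torch.nn as nn


class DisagreementEnsemble(nn.Module):
    """Hypothetical ensemble of one-step predictors over latent features.

    Assumption: each member maps (latent feature, action) to a prediction
    of some next-step target, e.g. the next observation embedding.
    """

    def __init__(self, feat_dim, action_dim, target_dim, num_models=5, hidden=64):
        super().__init__()
        self.heads = nn.ModuleList([
            nn.Sequential(
                nn.Linear(feat_dim + action_dim, hidden),
                nn.ELU(),
                nn.Linear(hidden, target_dim),
            )
            for _ in range(num_models)
        ])

    def forward(self, feat, action):
        x = torch.cat([feat, action], dim=-1)
        # Stack member predictions: (num_models, batch, target_dim)
        return torch.stack([head(x) for head in self.heads], dim=0)

    def intrinsic_reward(self, feat, action):
        preds = self.forward(feat, action)
        # Variance across ensemble members, averaged over target dimensions.
        # High variance means the members disagree, i.e. the model is uncertain.
        return preds.var(dim=0).mean(dim=-1)


if __name__ == "__main__":
    ensemble = DisagreementEnsemble(feat_dim=8, action_dim=2, target_dim=4)
    feat = torch.randn(3, 8)    # batch of 3 latent features
    action = torch.randn(3, 2)  # batch of 3 actions
    print(ensemble.intrinsic_reward(feat, action).shape)
```

In a setup like this, each member would be trained on the same replay data from a different initialization, and the resulting reward would be used in place of (or alongside) the task reward when training the actor and critic in imagination. How the repository wires this in is documented there.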

</div>

For implementation details, design choices, and experimental results, see the full repository:

https://github.com/PedroTajia/MyDreamerV2